Your guide to demystifying AI model sizes, from billion-parameter “brains” to the hardware that powers them—plus tips for running them yourself.

If you’ve seen names like GPT-3 175B, Llama-3 70B, or Grok-1 314B, you may wonder:

What does that “B” mean, and why should I care?

(Note: While some examples here are cloud-based for illustration, we’ll focus on locally runnable open-source models throughout.)

Don’t worry, it’s simpler than it sounds! Let’s break it down step by step.

What Even Is an AI Model?

Before we dive in, think of an AI model as a “digital brain” built from math and data. It’s like a super-smart program that learns from billions of examples (like books, websites, and conversations) to answer questions, write stories, or even code. The models behind ChatGPT are just one type; these are called “language models” because they handle words and text. Remember: You don’t need to build one from scratch; you can just “run” (use) pre-made ones on your computer or online. If AI models were pets, small ones would be like energetic puppies, easy to handle, while big ones would be like elephants: impressive, but good luck fitting one in your living room!

What Does the “B” Mean?

The “B” stands for billion parameters.

- 7B = 7 billion parameters

- 70B = 70 billion parameters

- 405B = 405 billion parameters

(Sometimes you’ll see “M” for million parameters in smaller models, like 125M = 125 million parameters, great for ultra-light setups.)

What are Parameters?

Think of parameters as tiny adjustable switches (more precisely, numeric weights) in an AI brain. When the AI trains, it tunes these values to learn grammar, facts, reasoning, even coding. More parameters generally mean more capacity for knowledge and complex tasks. It’s like stuffing more toppings on a pizza: the more you add, the tastier (and messier) it gets, but too many and it won’t fit in the oven!
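To make “parameters” concrete, here is a small sketch that counts them in a toy fully connected network, where every input-output connection has one weight and every output neuron has one bias. The layer sizes are hypothetical, chosen only for illustration; real LLMs use transformer layers, but their parameter counts are built up from the same kind of weight matrices.

```python
# Hypothetical layer sizes for illustration; real LLMs use different
# (and much larger) architectures built from similar weight matrices.


def dense_layer_params(n_in: int, n_out: int) -> int:
    """One weight per input-output pair, plus one bias per output."""
    return n_in * n_out + n_out


def network_params(layer_sizes: list[int]) -> int:
    """Sum the parameters of consecutive dense layers, e.g. [4, 8, 2]."""
    return sum(
        dense_layer_params(n_in, n_out)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


tiny = network_params([4, 8, 2])       # (4*8 + 8) + (8*2 + 2) = 58
wide = dense_layer_params(4096, 4096)  # a single 4096-wide layer: 16,781,312
print(tiny, wide)
print(f"About {7_000_000_000 / wide:.0f} such layers would hold 7 billion parameters")
```

Even one moderately wide layer holds millions of switches, which is how model sizes quickly reach billions.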

Analogy:

- Small brain (7B): quick and light, runs on a laptop. Like a bicycle, easy to use anywhere.

- Big brain (405B): very smart, but needs server-grade hardware (usually several data-center GPUs) to run. Like a rocket ship, powerful but requires a launchpad.

Tip: Parameters aren’t everything. A model with fewer parameters can still be “smarter” if it’s well-trained or specialized. Don’t chase size alone—focus on what the model is good at!

Does Bigger Always Mean Better? Not always!

Smaller models (7B–13B)

- Easier to run on home PCs or laptops.

- Great for chatting, small projects, and learning.

- Perk: They load fast and are far less likely to overwhelm your computer.

Larger models (70B–405B)

- Better at reasoning, coding, and complex problems.

- But they need massive hardware or cloud servers.

- Pitfall: Trying a huge model without the right setup can freeze your device—start small to avoid frustration! It’s like inviting a dinosaur to a tea party: amazing in theory, but your table might not survive.

A small instruction-tuned model (like Mistral 7B Instruct) can sometimes be more useful than a giant raw “base” model.

Model Name Tags Explained

When you see names like Llama-3-8B-Instruct, here’s what the extra words mean:

- Base: Raw model, just predicts text. Good for developers.

- Instruct / Chat: Tuned to follow instructions (best for everyday use).

- Code: Specialized for programming tasks.
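The naming convention above can be read almost mechanically. This sketch uses a hypothetical helper (`parse_model_name` is not from any library) that pulls the size tag and the variant out of a model name. Naming is a community habit rather than a standard, so treat its output as a hint and confirm details on the model card.

```python
import re

# Hypothetical helper: a rough parser for names like "Llama-3-8B-Instruct".
# Naming is only a convention, so real names may not follow this pattern.
SIZE_PATTERN = re.compile(r"(\d+(?:\.\d+)?)([BM])\b", re.IGNORECASE)
VARIANTS = ("instruct", "chat", "code", "base")


def parse_model_name(name: str) -> dict:
    params = None
    size = SIZE_PATTERN.search(name)
    if size:
        value = float(size.group(1))
        unit = size.group(2).upper()
        # "B" = billion parameters, "M" = million parameters
        params = value * (1e9 if unit == "B" else 1e6)
    lowered = name.lower()
    # Names without a tag are often base models, but check the model card.
    variant = next((v for v in VARIANTS if v in lowered), "base (assumed)")
    return {"parameters": params, "variant": variant}


print(parse_model_name("Llama-3-8B-Instruct"))
print(parse_model_name("Mistral-7B-Instruct-v0.2"))
```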

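Note: “Open-source” models (like Llama or Mistral) can be downloaded and tweaked for free, like open recipes anyone can use. Strictly speaking, many are “open-weight” models with their own license terms, so check the license before commercial use. “Closed-source” ones are kept secret by companies, so you access them via apps or paid APIs. Open-source is great for experimenting freely.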

Popular AI Models

Llama (by Meta)

- Open-source models: 8B, 70B, 405B.

- Free for developers and researchers.

Mistral (by Mistral AI)

- Efficient open models like Mistral 7B.

- Popular because they can run on personal devices.

2025 Trend: More models are becoming “multimodal” (handling images, audio, and text), like newer versions of Llama. If you’re just starting, stick to text-based ones to keep it simple.

How Do You Run These Models?

AI models must be loaded into memory before they can think. This is where hardware matters:

GPU: The AI’s Engine

The GPU (graphics card) does the heavy math.

NVIDIA GPUs are the most popular because they support CUDA.

Analogy: A GPU is like a team of chefs in a kitchen—thousands of them working together to cook (process) AI tasks quickly. Without it, your CPU (computer’s main brain) does the work alone, like one slow chef.

Running on CPU Without a GPU: If you don’t have a GPU, you can still run small models (7B or smaller, ideally quantized) using just your CPU. Tools like Ollama support CPU-only mode and fall back to the CPU when they find no supported GPU, but expect much slower performance: responses might take minutes instead of seconds. It’s a good option for basic testing on low-end hardware, though larger models become impractical without a GPU due to speed and memory limits.
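Once a tool like Ollama is running, you can also talk to it from your own scripts. Here is a minimal sketch using only Python’s standard library. It assumes Ollama is installed and serving on its default port 11434, and that a model named `llama3` has already been pulled (for example with `ollama pull llama3`). The `/api/generate` endpoint with `model`, `prompt`, and `stream` fields is Ollama’s REST API; `build_request` and `generate` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    # "stream": False asks for a single JSON object instead of streamed chunks.
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 600.0) -> str:
    # Generous timeout, because CPU-only runs can take minutes.
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

Usage, with the server running: `print(generate("llama3", "Explain photosynthesis simply."))`. The same call works on a CPU-only machine, just more slowly.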

CUDA in Simple Words:

CUDA = translator between AI models and the GPU.

It lets the GPU’s thousands of tiny processors work together to run AI models super fast.

Without CUDA or an equivalent, AI tools fall back to the CPU, which is much slower for large models.

Fact: CUDA is NVIDIA’s own platform and runs only on NVIDIA cards. If you have AMD or Intel, look for alternatives like ROCm (AMD) or oneAPI (Intel), but NVIDIA is easiest to start with. For Apple users, M-series chips (like M1, M2, M3, or M4) pair the CPU with a built-in GPU that shares the same (unified) memory. Tools like Ollama or LM Studio use Apple’s Metal framework to run models on that GPU, so Macs can run local models efficiently without a discrete GPU.
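If you write your own code with PyTorch (an assumption here; Ollama and LM Studio detect your hardware for you), a common pattern is to check which accelerator is available: CUDA for NVIDIA, MPS (PyTorch’s Metal backend) for Apple-silicon Macs, and otherwise the CPU. PyTorch’s ROCm builds for AMD cards report through the same `torch.cuda` API. `pick_device` is a hypothetical helper name.

```python
def pick_device() -> str:
    """Return "cuda", "mps", or "cpu" depending on what PyTorch can use.

    Assumes PyTorch as the framework; returns "cpu" if it is not installed.
    """
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch not installed: nothing to accelerate with
    if torch.cuda.is_available():  # NVIDIA via CUDA (ROCm builds also report here)
        return "cuda"
    mps = getattr(torch.backends, "mps", None)  # missing in old PyTorch versions
    if mps is not None and mps.is_available():  # Apple-silicon GPU via Metal
        return "mps"
    return "cpu"


print(pick_device())
```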

Rough Hardware Guide

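- 7B–8B Models: Need 8–16 GB VRAM. Example GPUs: NVIDIA RTX 3060 (12 GB) or RTX 3070 (8 GB).

- 13B Models: Need 16–24 GB VRAM. Example GPUs: NVIDIA RTX 4080 (16 GB) or RTX 3090 (24 GB).

- 30B–34B Models: Need 24–48 GB VRAM. Example GPU: NVIDIA RTX 4090 (24 GB).

- 70B+ Models: Need 48 GB+ VRAM, often 100+ GB. Server-grade GPUs like NVIDIA A100 or H100 (commonly 80 GB each; the largest models use several at once).

The lower figures assume a quantized (compressed) model; full 16-bit versions need roughly 2 bytes of VRAM per parameter.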
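Where do these numbers come from? A common rule of thumb is that the weights alone take parameters × bits per weight ÷ 8 bytes, so a 7B model needs about 14 GB at 16-bit and about 3.5 GB at 4-bit. The sketch below adds an assumed 20% overhead for the context (KV cache) and runtime buffers; the real overhead depends on context length and the tool you use, so treat the results as ballpark figures.

```python
def estimate_vram_gb(params_billion: float, bits_per_weight: int = 16,
                     overhead: float = 0.2) -> float:
    """Rough VRAM estimate in GB (1 GB = 10**9 bytes).

    weights = parameters x bits / 8 bytes. `overhead` is an ASSUMED 20% extra
    for context (KV cache) and runtime buffers; real values vary.
    """
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead) / 1e9


for size in (7, 13, 34, 70):
    fp16 = estimate_vram_gb(size, 16)
    q4 = estimate_vram_gb(size, 4)
    print(f"{size}B: ~{fp16:.0f} GB at 16-bit, ~{q4:.0f} GB at 4-bit")
```

Under these assumptions a 34B model lands around 20 GB at 4-bit, which is why it can squeeze onto a 24 GB card such as the RTX 4090 only when quantized.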

Tricks to help:

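- Quantization: compresses the model by storing its weights with fewer bits (e.g., 4-bit instead of 16-bit), cutting memory use at the cost of a small loss in quality.

- Offloading: keeps part of the model in system RAM and runs it on the CPU (slower, but works).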
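Here is a minimal sketch of the idea behind quantization, assuming the simplest scheme: symmetric 8-bit quantization with one scale for the whole list of weights. Each float is divided by the scale and rounded to an integer between -127 and 127, so it fits in 1 byte; multiplying back by the scale recovers an approximation. Real formats (such as the 4-bit GGUF files used by llama.cpp and Ollama) are more elaborate, for example storing a separate scale for each small block of weights. The weights below are made up.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric 8-bit quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / 127
    return [round(w / scale) for w in weights], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats; the differences are the quantization error."""
    return [v * scale for v in q]


weights = [0.42, -1.37, 0.05, 0.91, -0.66]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print([round(r, 3) for r in restored])
# Each weight now needs 1 byte instead of 2 (16-bit) or 4 (32-bit), plus one shared scale.
```

The restored values differ from the originals by at most half a scale step; that small error is the precision traded for a 2× to 4× memory saving.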

Warning: Always check your computer’s specs first (search “how to check GPU VRAM” online). Heavy workloads can make your device run hot, so give it breaks! If your laptop starts sounding like a jet engine, it’s time to give it a vacation.
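On NVIDIA cards, one way to check VRAM is the `nvidia-smi` tool that ships with the driver. This sketch calls it with its `--query-gpu` and `--format` options and parses the result. `parse_nvidia_smi` and `query_gpus` are hypothetical helper names; on machines without an NVIDIA driver the function simply returns an empty list.

```python
import shutil
import subprocess


def parse_nvidia_smi(csv_text: str) -> list[tuple[str, int]]:
    """Parse 'name, memory.total' lines (memory in MiB) into (name, MiB) pairs."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, mem = (part.strip() for part in line.rsplit(",", 1))
        gpus.append((name, int(mem)))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Return [] when nvidia-smi is missing or fails (no NVIDIA GPU or driver)."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return []
    return parse_nvidia_smi(result.stdout)


for name, mib in query_gpus():
    print(f"{name}: {mib / 1024:.1f} GiB VRAM")
```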

Important Extra Concepts

Training vs Using:

- Training = teaching the model (done by big companies with supercomputers).

- Using/Running = loading a finished model on your computer. Most people only run models.

Context Window:

- Like the AI’s “short-term memory.”

- Decides how much text it can read and remember at once, measured in tokens (e.g., 4k tokens: a short story; 128k tokens: a full book).

- Related Term: Tokens: AI “words.” Text is broken into tokens (e.g., “hello” might be one token), which are like bite-sized pieces of language that the model processes. A bigger context window means more tokens fit, so the AI remembers longer conversations. For example, the phrase “Hello What is AI?” typically breaks down into about 5 tokens in common models like Llama or Mistral (e.g., [‘Hello’, ‘ What’, ‘ is’, ‘ AI’, ‘?’]—exact count can vary slightly by tokenizer). Tools like Hugging Face’s tokenizer demo can help you check any text.
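To see tokens and context limits in action without downloading anything, here is a toy sketch. The regex splitter is an assumption for illustration and is not how Llama or Mistral tokenize (real tokenizers use a learned vocabulary and often split rare words into several pieces), though it happens to reproduce the five-token split above. The count estimate uses the common rule of thumb of about 4 characters per English token, and the 512-token reply reserve is an arbitrary choice.

```python
import re

# Toy splitter for illustration only: real tokenizers use a learned vocabulary.
TOY_PATTERN = re.compile(r" ?\w+| ?[^\w\s]+")


def toy_tokenize(text: str) -> list[str]:
    return TOY_PATTERN.findall(text)


def rough_token_count(text: str) -> int:
    # Rule of thumb for English: about 4 characters per token.
    return max(1, round(len(text) / 4))


def fits_in_context(text: str, context_window: int, reserve_for_reply: int = 512) -> bool:
    # The model's answer also uses tokens, so leave room for it.
    return rough_token_count(text) + reserve_for_reply <= context_window


print(toy_tokenize("Hello What is AI?"))  # ['Hello', ' What', ' is', ' AI', '?']
print(fits_in_context("a" * 4000, context_window=4096))
```

For exact counts, use the model’s own tokenizer, for example through Hugging Face’s Transformers library.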

Token Speed in LLMs: This measures how fast a model processes or generates tokens, usually in tokens per second (TPS). As a rough guide, local setups with good GPUs can reach 20–100+ TPS for small models, making responses quick, while slower hardware may drop to 5–20 TPS. Bigger models and CPU-only runs are slower still, so pick a smaller or quantized model if you need fast answers.
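Here are two ways to put a number on token speed, sketched in Python. If you use Ollama, its `/api/generate` response reports `eval_count` (tokens generated) and `eval_duration` (in nanoseconds), so TPS is a simple division; otherwise you can time any stream of tokens yourself. The helper names and sample numbers are made up for illustration.

```python
import time
from collections.abc import Iterable


def tokens_per_second_from_ollama(stats: dict) -> float:
    """Use Ollama's eval_count (tokens) and eval_duration (nanoseconds) fields."""
    return stats["eval_count"] / (stats["eval_duration"] / 1e9)


def measure_tps(token_stream: Iterable[str]) -> float:
    """Time any stream of generated tokens yourself (works with any tool)."""
    start = time.perf_counter()
    count = sum(1 for _ in token_stream)
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")


sample = {"eval_count": 250, "eval_duration": 5_000_000_000}  # made-up numbers
print(tokens_per_second_from_ollama(sample))  # 50.0
print(f"A 500-token answer at 10 TPS takes about {500 / 10:.0f} seconds.")
```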

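Token Charges in Cloud: Services like OpenAI’s API or xAI’s Grok API charge based on tokens used, often with separate prices for input and output (e.g., anywhere from well under $0.01 to around $0.10 per 1,000 tokens, depending on the model). Prices vary widely and change often. A simple query might cost only a fraction of a cent, but long sessions add up, so check pricing to avoid surprises!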
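A quick way to estimate cloud costs is to multiply token counts by per-token prices. This sketch assumes separate input and output prices quoted per million tokens, as many providers do; the prices used are hypothetical, not any provider’s real rates.

```python
def query_cost(input_tokens: int, output_tokens: int,
               usd_per_million_input: float, usd_per_million_output: float) -> float:
    """Input and output tokens billed separately, with prices per million tokens."""
    return (input_tokens * usd_per_million_input
            + output_tokens * usd_per_million_output) / 1_000_000


# Hypothetical prices: check the provider's current pricing page for real ones.
single = query_cost(500, 300, usd_per_million_input=1.00, usd_per_million_output=4.00)
session = 200 * single  # e.g. 200 similar queries in a long session
print(f"one query: ${single:.4f}, 200 queries: ${session:.2f}")
```

This prints `one query: $0.0017, 200 queries: $0.34`. In a chat, earlier messages are typically re-sent with every request, so input tokens (and costs) grow as a conversation gets longer.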

Fine-Tuning

- Like giving the model extra lessons on a specific topic (e.g., making a base model better at math). You can try this with Hugging Face’s libraries, but start with pre-tuned models.

Safety & Guardrails

- Instruct models often include safety filters to block harmful outputs.

- Open-source ones (like Llama) give more flexibility, but need responsible use.

Ethics Tip: Always think about privacy: don’t share sensitive info. AI can make mistakes or reflect biases, so double-check facts. Use it for good, like learning or fun projects!

Why Small Models Matter

- Great for offline use, privacy, and mobile/edge devices.

- Bonus: They use less energy, which is kinder to the environment (big models running in data centers can use enormous amounts of electricity).

Tips

- Start with a 7B–13B instruct model: fast, friendly, and easy to run.

- Use tools like Ollama or LM Studio: simple setup for local models (free and user-friendly).

- Cloud: If you don’t have a good GPU, try cloud options (ChatGPT, Hugging Face Spaces, Google Colab).

- Common Mistakes to Avoid: Ignoring hardware limits (test with small models first), expecting perfect answers (AI hallucinates sometimes—verify info), or forgetting updates (models improve over time; check for new versions).

- Free Resources: Join communities like Reddit’s r/MachineLearning or Hugging Face forums for help. Experiment safely—start with prompts like “Explain photosynthesis simply.”

https://www.reddit.com/r/MachineLearning/

https://discuss.huggingface.co

Remember: Pick the right size for your hardware and needs.

Key Takeaway

- “B” = billion parameters = AI brain size.

- NVIDIA GPUs + CUDA = the engine that makes models run fast.

- Small models = easier to use.

- Big models = smarter, but need huge hardware.

- Start small, experiment, and grow as you learn. Have fun—AI is an exciting world!

Useful AI Model Lists

Here’s a category-wise list of some of the best locally runnable AI models, focused on open-source and widely deployable options, including embedding models:

1. General-Purpose LLMs (for chat, writing, general tasks)

- Meta Llama 3 (e.g., 8B, 70B) – Open, strong across tasks, community favorite.

- Mistral 7B – Apache-licensed, fast, efficient, great for local machines.

- Yi (01.ai) (e.g., 6B, 34B) – High quality, multilingual, popular in China and globally.

- Qwen 3 (Alibaba) (e.g., 0.6B, 4B, 8B, 14B, 32B, 235B) – Multilingual, strong performance, large family of models.

- Gemma (Google) (2B, 7B, 27B) – Modern, efficient, well-optimized for local use.

- Falcon (TII UAE) (7B, 40B) – Open, strong, large models available.

- Baichuan (e.g., 7B, 13B) – High-quality open models from China.

- SOLAR (Upstage) (10.7B) – Efficient, strong on Korean and English.

- Orca 2 (Microsoft) (7B, 13B) – Fine-tuned for reasoning, good for research.

2. Coding & Developer LLMs

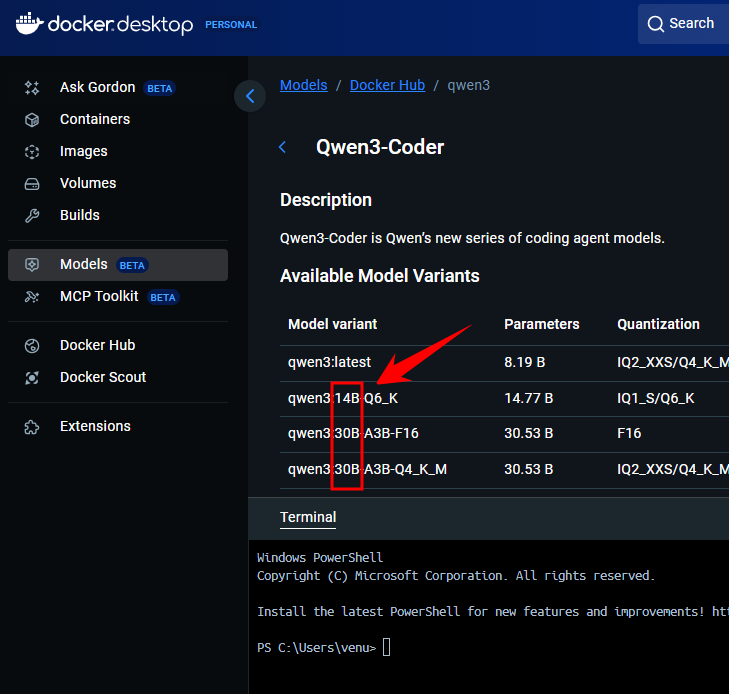

- Qwen 2.5 Coder (7B, 14B, 32B) – State-of-the-art open coding model, supports many languages.

- Code Llama (Meta) (7B, 13B, 34B, 70B) – Specialized for coding, widely used.

- DeepSeek Coder (6.7B, 33B) – Strong on code generation and understanding.

- WizardCoder (15B, 34B) – Popular, good for code synthesis.

- StarCoder2 (7B, 15B) – Open, strong for code autocomplete.

- aiXcoder – Lightweight, Python/Java focused.

- Granite Code (IBM) (8B, 20B, 34B) – IBM’s modern coding LLM.

- SQLCoder (Defog) (7B, 15B) – Specialized for SQL.

3. Reasoning, Math & Science LLMs

- QwQ-32B (Alibaba) – Strong on mathematical reasoning.

- DeepSeek Math (7B) / DeepSeek-R1 distilled models (7B, 14B, 32B, 70B) – State-of-the-art math reasoning.

- OpenMath (NVIDIA) (7B, 70B) – Focused on mathematical reasoning.

- InternLM-Math (7B, 20B) – Coding and math emphasis.

- GLM-4 (THUDM) (32B) – Modern science and reasoning model.

4. Multilingual LLMs

- Qwen 2.5 (7B, 14B, 32B, 72B) – Excellent multilingual support, especially for East Asian languages.

- BLOOMZ (176B) – Massive, supports many languages, requires heavy hardware.

5. Vision & Multimodal Models

- LLaVA (7B, 13B) – Open, chat with images.

- Qwen-VL family (e.g., Qwen2.5-VL 7B, 32B, 72B) – Advanced vision-language models.

- PaliGemma (3B) / Gemma 3 (4B, 12B, 27B) – Google’s open vision-language models.

6. Function Calling & Tool Use

- Gorilla (7B) – Open, function/tool calling.

- NexusRaven (13B) – Tool use and API calling.

- ToolLlama (7B) – Tool integration.

7. Audio/Speech Models

- Whisper (OpenAI) – Speech-to-text, multiple sizes.

- SpeechT5 (Microsoft) – Text-to-speech.

8. Embedding Models (for search, retrieval, semantic tasks)

- BAAI/bge-base/large (110M, 335M params) – Among the strongest open embedding models for English.

- intfloat/e5-base/large (110M, 335M params) – Great for retrieval and semantic search.

- sentence-transformers/all-MiniLM-L6-v2 (22M params) – Ultra-lightweight, fast.

- nomic-ai/nomic-embed-text-v1 (~137M params) – Modern, strong performance.

- Alibaba-NLP/gte-Qwen2-7B/1.5B (1.5B, 7B params) – Large, multilingual embeddings.

- jinaai/jina-embeddings-v2-base-code (~161M params) – Optimized for code search.
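Embedding models turn text into lists of numbers (vectors), and search then ranks documents by how closely their vectors point in the same direction, commonly measured with cosine similarity. This sketch uses made-up 3-dimensional vectors in place of real model output; a real model such as all-MiniLM-L6-v2 produces 384-dimensional vectors, but the ranking step is the same.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank(query_vec: list[float], doc_vecs: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Sort documents from most to least similar to the query."""
    scores = {doc: cosine_similarity(query_vec, vec) for doc, vec in doc_vecs.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Made-up vectors standing in for real embedding-model output.
docs = {
    "How plants make food": [0.9, 0.1, 0.0],
    "GPU buying guide": [0.0, 0.2, 0.95],
    "Photosynthesis explained": [0.85, 0.2, 0.05],
}
query = [0.88, 0.15, 0.02]  # pretend embedding of "what is photosynthesis?"
for doc, score in rank(query, docs):
    print(f"{score:.3f}  {doc}")
```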

Thanks for reading!

One response to “AI Model Basics: Understanding Size, Hardware, and Setup”

[…] Read my previous article about Basics:AI Model Basics – Understanding Size, Hardware and Setup: https://wisecodes.venuthomas.in/2025/08/24/ai-model-basics-understanding-size-hardware-and-setup/ […]